RL_Attention

RL_LSTM

Understanding LSTM — a tutorial into Long Short-Term Memory Recurrent Neural Networks

Paper Source
Journal:
Year: 2019
Institute:
Author: Ralf C. Staudemeyer, Eric Rothstein Morris
#Long Short-Term Memory #Recurrent Neural Networks

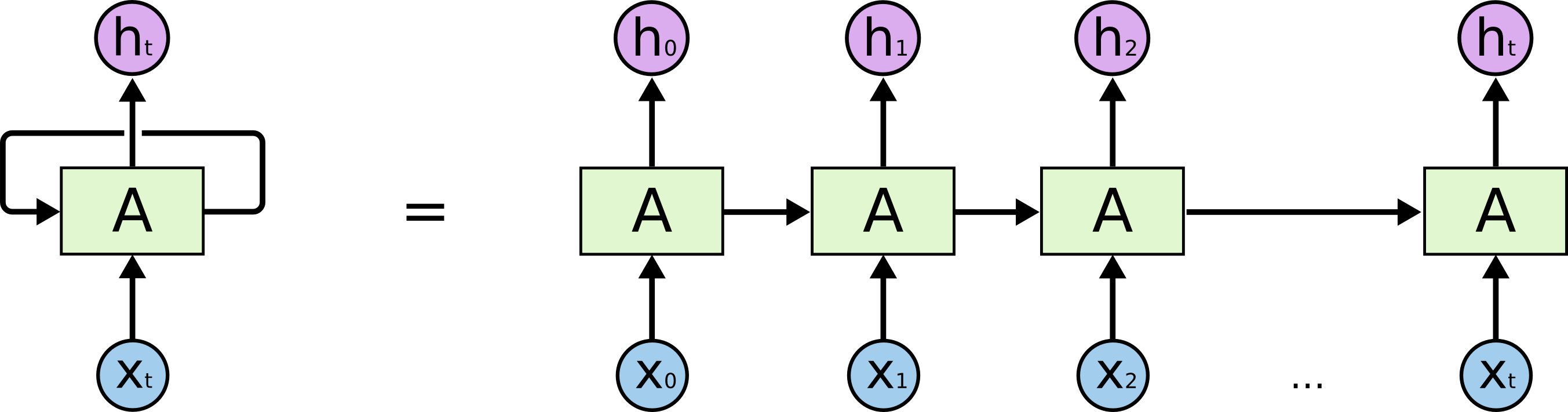

Recurrent Neural Network

An RNN model is typically used to process long sequential data like video, audio, etc. A simple RNN looks like:

However, simple perceptron neurons that linearly combine the current input element and the last unit state can easily lose long-term dependencies. A standard RNN cannot bridge more than 5–10 time steps. This is because back-propagated error signals tend to either grow or shrink with every time step, which makes it hard for the network to learn. This is where LSTM comes into the picture.
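To make the shrinking error signal concrete, here is a minimal sketch of a scalar simple RNN. It assumes a `tanh` activation and hypothetical toy weights (`w_x`, `w_h`), none of which come from the tutorial itself. By the chain rule, each time step multiplies the back-propagated signal by `w_h * (1 - h_t**2)`, so the product decays quickly when that factor stays below 1.

```python
import math

def rnn_step(x_t, h_prev, w_x, w_h, b=0.0):
    """One step of a scalar simple RNN: h_t = tanh(w_x*x_t + w_h*h_prev + b)."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)

def gradient_through_time(xs, w_x, w_h, h0=0.0):
    """Return d h_T / d h_0 for the scalar RNN above.

    Each step contributes the factor d h_t / d h_{t-1} = w_h * (1 - h_t**2).
    """
    h = h0
    grad = 1.0
    for x_t in xs:
        h = rnn_step(x_t, h, w_x, w_h)
        grad *= w_h * (1.0 - h * h)
    return grad

# Toy constant input sequence and weights (assumed values, for illustration only).
xs = [0.1] * 20
grad_2_steps = abs(gradient_through_time(xs[:2], w_x=0.5, w_h=0.5))
grad_20_steps = abs(gradient_through_time(xs, w_x=0.5, w_h=0.5))
print(grad_2_steps, grad_20_steps)
```

With these weights, the signal after 20 steps is several orders of magnitude smaller than after 2 steps. With a linear unit and `|w_h| > 1`, the same product would grow instead of shrink.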

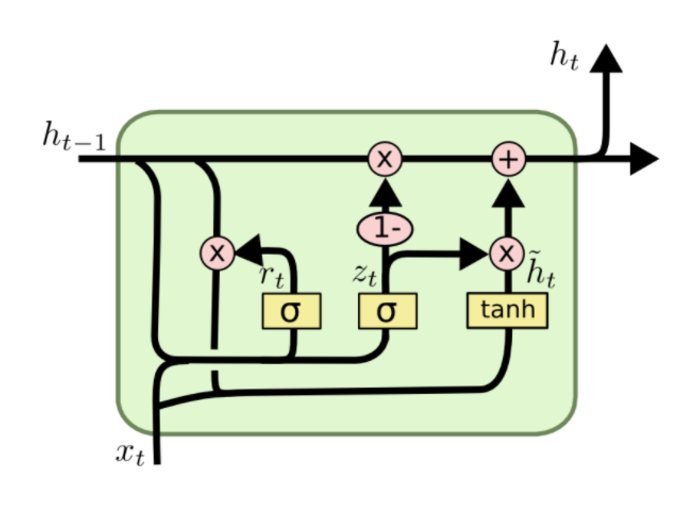

LSTM Networks

LSTM is short for Long Short-Term Memory, a type of network explicitly designed to avoid the long-term dependency problem.

The picture above shows that each recurrent unit contains multiple neural network layers.
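The layers inside one recurrent unit can be sketched as follows. This is a minimal scalar version of the commonly used LSTM variant with a forget gate, not necessarily the exact formulation in the picture. The parameter dictionary `params` and its toy values are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x_t, h_prev, c_prev, p):
    """One step of a scalar LSTM cell.

    p maps each layer name ("f", "i", "o", "g") to a tuple (w_x, w_h, b).
    """
    def layer(name, activation):
        w_x, w_h, b = p[name]
        return activation(w_x * x_t + w_h * h_prev + b)

    f = layer("f", sigmoid)    # forget gate: how much of the old cell state to keep
    i = layer("i", sigmoid)    # input gate: how much of the candidate to write
    o = layer("o", sigmoid)    # output gate: how much of the cell state to expose
    g = layer("g", math.tanh)  # candidate cell value
    c_t = f * c_prev + i * g   # additive cell-state update
    h_t = o * math.tanh(c_t)   # new hidden state
    return h_t, c_t

# Hypothetical toy parameters, identical for all four layers.
params = {name: (0.5, 0.5, 0.0) for name in ("f", "i", "o", "g")}
h, c = 0.0, 0.0
for x in [1.0, 0.5, -0.5]:
    h, c = lstm_step(x, h, c, params)
print(h, c)
```

The additive update of `c_t` is the part that lets information persist across many steps: when the forget gate is close to 1, the cell state is carried forward almost unchanged.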


RL_OpenAI_Five

RL_Paper_05:Hierarchical RL

META LEARNING SHARED HIERARCHIES

Paper Source
Journal:
Year: 2017
Institute: OpenAI
Author: Kevin Frans, Jonathan Ho, Xi Chen, Pieter Abbeel, John Schulman
#Deep Reinforcement Learning #Meta Learning

RL_Paper_04:PPO

Proximal Policy Optimization Algorithms

Paper Source
Journal:
Year: 2017
Institute: OpenAI
Author: John Schulman, Filip Wolski, Prafulla Dhariwal, Alec Radford, Oleg Klimov
#Deep Reinforcement Learning #Policy Gradient

PPO (Proximal Policy Optimization) is a policy gradient method that is much simpler to implement and has better sample complexity. It uses a novel objective with clipped probability ratios, which forms a pessimistic estimate of the performance of the policy ($\theta_{old}$ is the vector of policy parameters before the update).
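The clipped objective can be sketched in a few lines. With the probability ratio $r_t(\theta) = \pi_\theta(a_t \mid s_t) / \pi_{\theta_{old}}(a_t \mid s_t)$ and an advantage estimate $\hat{A}_t$, each sample contributes $\min(r_t \hat{A}_t, \mathrm{clip}(r_t, 1-\epsilon, 1+\epsilon) \hat{A}_t)$. The function names below are hypothetical, and the default $\epsilon = 0.2$ is an assumed, commonly used value. This is a plain-Python illustration of the formula, not a training implementation.

```python
def clipped_surrogate(ratio, advantage, epsilon=0.2):
    """Per-sample clipped surrogate: min(r*A, clip(r, 1-eps, 1+eps)*A)."""
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    return min(ratio * advantage, clipped_ratio * advantage)

def ppo_clip_objective(new_probs, old_probs, advantages, epsilon=0.2):
    """Average clipped surrogate over a batch of (new prob, old prob, advantage)."""
    terms = [
        clipped_surrogate(new_p / old_p, adv, epsilon)
        for new_p, old_p, adv in zip(new_probs, old_probs, advantages)
    ]
    return sum(terms) / len(terms)

# A positive advantage stops paying off once the ratio exceeds 1 + epsilon.
print(clipped_surrogate(1.5, 1.0))
# A negative advantage is never clipped in the optimistic direction.
print(clipped_surrogate(1.5, -1.0))
```

Taking the minimum of the clipped and unclipped terms is what makes the estimate pessimistic: a change in the ratio is ignored when it would improve the objective, and counted when it would make it worse.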

RL Weekly 1

Unsupervised State Representation Learning in Atari

[Paper] [Code]
Journal: NeurIPS
Year: 2019
Institute: Mila, Université de Montréal
Author: Ankesh Anand*, Evan Racah*, Sherjil Ozair*
#State Representation Learning #Contrastive Self-Supervised Learning

State representation learning without supervision from rewards is a challenging open problem. This paper proposes a new contrastive state representation learning method called Spatiotemporal DeepInfomax (ST-DIM) that leverages recent advances in self-supervision and learns state representations by maximizing the mutual information across spatially and temporally distinct features of a neural encoder of the observations.
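As a rough illustration of how a contrastive objective relates to mutual information, here is a minimal sketch of an InfoNCE-style loss. It assumes plain dot-product scores and a hypothetical function name, `info_nce_loss`. The paper's actual score function and its pairing of spatial and temporal features are not reproduced here. Each anchor feature is scored against every candidate, and the loss is low when the matching candidate scores highest.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def info_nce_loss(anchors, positives):
    """InfoNCE-style loss over a batch of feature vectors.

    positives[i] is the matching sample for anchors[i]; every other
    positives[j] acts as a negative for anchors[i].
    """
    total = 0.0
    for i, anchor in enumerate(anchors):
        scores = [dot(anchor, candidate) for candidate in positives]
        # Numerically stable log-sum-exp over all candidates.
        top = max(scores)
        log_sum_exp = top + math.log(sum(math.exp(s - top) for s in scores))
        # Cross-entropy of picking the true match among the candidates.
        total += log_sum_exp - scores[i]
    return total / len(anchors)

# Toy features: matched pairs point in the same direction (assumed values).
features_t = [[4.0, 0.0], [0.0, 4.0]]
features_t_plus_1 = [[4.0, 0.0], [0.0, 4.0]]
print(info_nce_loss(features_t, features_t_plus_1))
```

Minimizing this kind of loss raises a lower bound on the mutual information between the two sets of features, which is why it is a common choice for methods of this type.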

RL_Paper_03:Rainbow

Rainbow: Combining Improvements in Deep Reinforcement Learning

Paper Source
Journal: AAAI
Year: 2017
Institute: DeepMind
Author: Matteo Hessel, Joseph Modayil, Hado van Hasselt
#Deep Reinforcement Learning

Abstract

This paper examines six main extensions to the DQN algorithm and empirically studies their combination. (It is a good paper that summarizes several important techniques for alleviating the problems remaining in DQN and offers valuable insights into this research area.)

Baseline: Deep Q-Network (DQN) Algorithm Implementation in CS234 Assignment 2

RL_Paper_02:DQN

Human-level control through deep reinforcement learning

Paper Source
Journal: Nature
Year: 2015
Institute: DeepMind
Author: Volodymyr Mnih*, Koray Kavukcuoglu*, David Silver*
#Deep Reinforcement Learning(DRL)

Abstract

To use reinforcement learning successfully in situations approaching real-world complexity, agents are confronted with a difficult task: they must derive efficient representations of the environment from high-dimensional sensory inputs, and use these to generalize past experience to new situations. Remarkably, humans and other animals seem to solve this problem through a harmonious combination of reinforcement learning and hierarchical sensory processing systems, the former evidenced by a wealth of neural data revealing notable parallels between the phasic signals emitted by dopaminergic neurons and temporal difference reinforcement learning algorithms.

RL_Paper_01:Self-Regulated Learning

Aerobatics Control of Flying Creatures via Self-Regulated Learning

Paper Source
Journal: ACM Transactions on Graphics
Year: 2018
Institute: Seoul National University, South Korea
Author: JUNGDAM WON, JUNGNAM PARK, JEHEE LEE*
#Physics-Based Controller #Deep Reinforcement Learning(DRL) #Self-Regulated Learning

Abstract

This paper proposes Self-Regulated Learning (SRL), which is combined with DRL to address the aerobatics control problem. The key idea of SRL is to allow the agent to take control of its own learning using an additional self-regulation policy. This policy allows the agent to regulate its goals according to the capability of the current control policy. The control and self-regulation policies are learned jointly as learning progresses. Self-regulated learning can be viewed as the agent building its own curriculum and seeking a compromise on the goals.