System Design

In a system design interview, you should at least do the following 4 tips:

- Ask good questions: what feature to work on? how much to scale?

- To figure out which feature he cares about and which feature he doesn’t, and make sure the finally feature set is small, then go deep into this feature set.

- How much data you need to store in the database, how many requests per second(QPS) you need to handle, and what kind of latency is expected.

- Don’t use buzzwords.

- Clear and organized thinking.

- Dirve discussions(80-20 rule): you should be talking 80% of the time and the interviewer should be talking 20% of the time.

The basic features which is required in a system design.

- Features: figure out what features does the interviewer need

- Define APIs

- Availability: when a power cut or your datacenter goes down, if your service is still available.

- Latency Performance

- Scalability: it going to be scaled as we add more users or more requests

- Durability: data can be stored in a database securely and data is not lost and data is not compromised.

- Class Diagram(Object Oriented Principles)

- Security & Privacy

- Cost Effective

Important concepts that you need to know.

Vertical vs Horizontal Scaling

- Vertial: add more memory, CPU, and hard drive to an existing host (single point failure)

- Horizontal: keep the host small and add more hosts (distributed system)

CAP Theorem

- CAP Theorem is a concept that a distributed database system can only have 2 of the 3: Consistency, Availability and Partition Tolerance.

- Consistency(一致性): all nodes see the same data at the same time. Simply put, performing a read operation will return the value of the most recent write operation causing all nodes to return the same data.

- Availability(可用性): every request should gets a response on success/failure, regardless of the state of any individual node in the system.

- Partion Tolerance(分区容限): can sustain any amount of network failure that doesn’t result in a failure of the entire network.

ACID vs BASE

- ACID: Atomicity, Consistency(Strong), Isolation, and Durablity, which is used for relational database.

- BASE: Basically Available, Soft State, and Eventual Consistency, which is used for NoSQL database.

Partitioning / Sharding Data

Database Sharding

A database shard is a horizontal partition of data in a database or search engine. Each individual partition is referred to as a shard or database shard. Each shard is held on a separate database server instance, to spread load.

Some data within a database remains present in all shards,[notes 1] but some appears only in a single shard. Each shard (or server) acts as the single source for this subset of data.

In Sharding, one’s data is broken into two or more smaller chunks, called logical shards. The logical shards are then distributed across separate database nodes, referred to as physical shards.

Advantages of Sharding

- High Availability

- Faster queries response

- More write bandwidth(write data in parallel)

- Scaling out

Disadvantages of Sharding

- More complexity

- A heavier reliance on the interconnection between servers.

- When more than one shard must be searched, increased latency when querying.

- Rebalancing data when database hotspot.

Types of Sharding Architecture

- Key Based Sharding: by customer ID number, client application IP address, Country Zip code, etc.

- Range Based Sharding: sharding the data based on the range of given values.

- Directory Based Sharding: maintain a static lookup table which used to keep track of which shard holds which data..

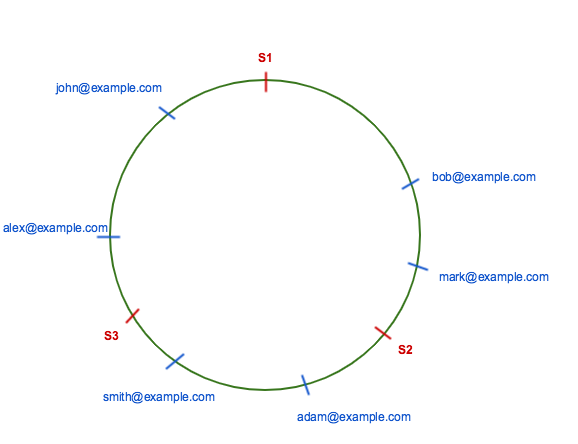

Consistent Hashing

Distributed Hashing

Use a hash table to distribute the requests to different servers: server = hash(key) modulo N (N = #servers).

Rehashing

When a server is crashed, the number of servers N = N - 1, then, all the requests should be rehashed, the server location for almost all requests changed, which will increase the load on origin in case of caching servers as there will be cache miss of keys and all of them needs to be rehashed.

Consistent Hashing

To solve the problem of rehashing, consistent hashing provides a distribution scheme which does not directly depend on the number of servers. All the requests and the servers are hashed by the same hash function to a abstract circle range, called hash ring. This allows servers and objects to scale without affecting the overall system.

To evenly distribute the load among servers when a server is added or removed, it creates a fixed number of replicas(known as virtual nodes by using several hash functions) of each server and distributed it along the circle.

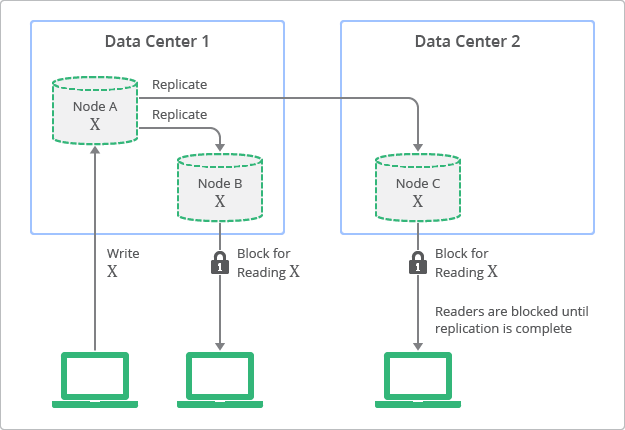

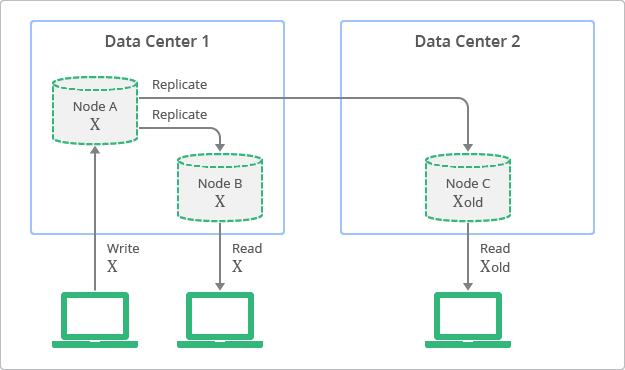

Optimistic vs Pessimistic Locking

- Optimistic Locking: No locking, check before you commit the transaction, if the version number of that data is different before and after, just rollback and do the operate again.

- Pessimistic Locking: Lock the data beforehand until you commit the transaction.

Strong vs Eventual Consistency

- Strong consistency is the consistency in CAP.

- Eventual consistency provides higher Availability, like the famous DNS.

Relational DB vs NoSQL DB

- Relational DB provides all these nice ACID properties.

- NoSQL DB provides scales a little bit better and higher availability.

Types of NoSQL

- Key Value

- Wide Column

- Document Based: to store semi-structured data, XML..

- Graph Based

Caching

- Caching improves latency and can reduce the load on your servers and databases.

- Every node has its own caching so the cache data is not shared between nodes.

- Cache can’t be the source of truth; Cache data has to be pretty small(keep the data in memory).

Caching can be done at different level.

- Client Caching

- CDN Caching

- Web Server Caching

- Database Caching

- Application Caching: In-memory key-value store like Memcached and Redis

- Caching Database Queries: whenever you query your database, your store the result dataset in the cache.

- Caching Objects: store the data as an object as you do in your application code

Cache-Invalidation Methods:

- Cache-Aside: for read-heavy workloads, application checks the cache first, if cache-hit, return the result, if cache-miss, checks the data store.

- Read-Through: for read-heavy workloads, similiar as cache-aside

- Write-Through: an application uses the cache as the main data source to read and write. Cache sits between application and data store. The cache is responsible for reading and writing to the database. So that data in the cache is not stale.

- Write-Behind (Write-Back): for write-heavy workloads, data is first written to the cache and then asynchronously data is updated to the data store, improving write performance.

- Refresh-ahead

Message Queue

Microservices

Microservices are small, focused on doing one thing well and autonomous services that work together.

Data center/Racks/Hosts

Limited Recources: CPU/Memory/Hard Drive/Netword Bandwidth

Random vs Sequential Read/Write on the Disk

Http vs Http2 vs WebSockets

TCP/IP Model

IPV4 vs IPV6

TCP vs UDP

DNS Lookup

Https vs TLS

Public Key Infrastructure & Certivicate Authority

Symmetric vs Asymmetric Encryption

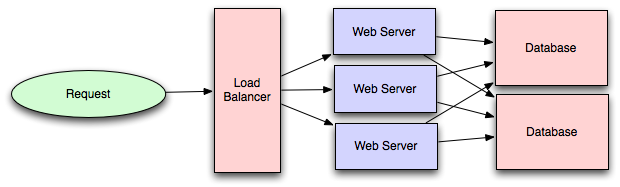

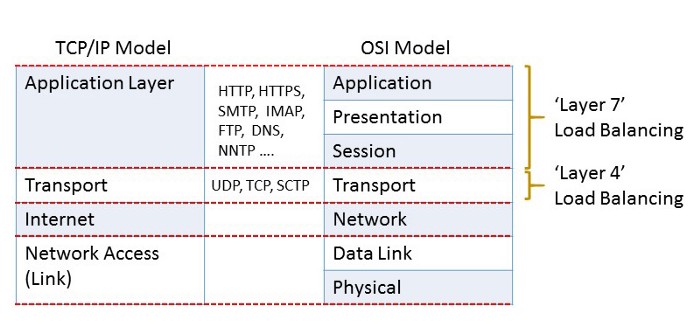

Load Balancer -> L4 vs L7

Load Balancing Algorithms:

- Round Robin: 循环队列

- Least Connections: Directs the traffic to the server with fewest active connections.

- Least Response Time: Directs the traffic to the server with fewest active connections and lowest average response time.

- Least Bandwidth Method: Directs the traffic to the server that is currently serving the least amount of traffic.(measured in megabits per second (Mbps))

- IP Hash

- Session persistence or Sticky Session: Directs all the request for a particular session to he same server that serviced the first request for that session.

Load Balancer Categories:

- DNS Round Robin

- L3/L4 Load Balancer: traffic is routed by IP address and TCP port. “Layer 4 load balancing” most commonly refers to a deployment where the load balancer’s IP address is the one advertised to clients for a web site or service (via DNS, for example). As a result, clients record the load balancer’s address as the destination IP address in their requests. Usually, it is a kind of hardware load balancer.

- L7 Load Balancer: traffic is routed by what is inside the HTTP protocol(application layer), such as HTTP header, uniform resource identifier, SSL session ID and HTML form data. Layer 7 load balancing enables ADC (Application Delivery Controllers) to redirect traffic more intelligently by inspecting content to gain deeper context on the application request. This additional context allows the ADC to not only optimize load balancing but to also rewrite content, perform security inspections and to implement access controls. And according to the requests’ content, to redirect those requests to different servers.

CDNs & Edge

Bloom Filters & Count-min Sketch

- Bloom filters are used to decide if an element is a member of set, it can have false positives but never have false negative.

- Count-min sketch is used to count the frequency of events. Keep the top k events amount a large dataset.

Paxos-Consensus over distributed hosts

- Leader Election

Design Pattern & Object Oriented Design

Virtual Machines & Containers

Publisher-Subscribe over Queue

- Customer facing requests should not be directly exposed to a Pub-Sub system. Encryption (e.g. Transport Layer Security (SSL/TLS)) can prevent unauthorized access.

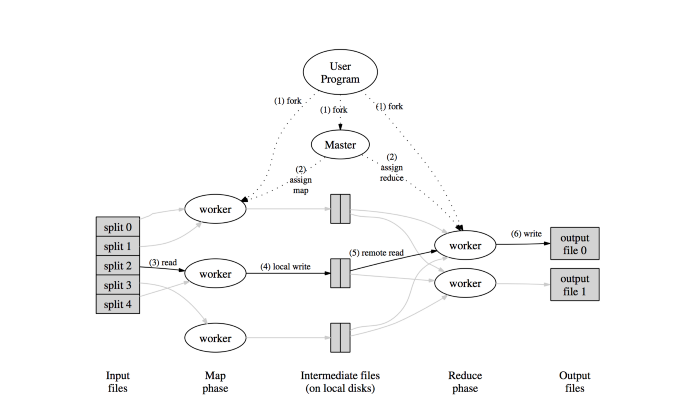

Map Reduce

- Map: Filter and sorting the data.

- Reduce: Summarizing the data.

- Splits the input file in M chunks and starts many copies of the program on a cluster of machines.

- Master picks idle workers and assigns each one a map task or a reduce task out of M map tasks and R reduce tasks.

- Map task worker reads and parses key/value pairs out of input split assigned and passes each key/value pair to user-defined map function. Output key/value pairs by map function are buffered in memory.

- Buffered pairs are partitioned in R partitions and written to the local disks periodically. Locations of these partitioned are passed to the master, who forwards these locations to reduce workers.

- Reduce workers are notified by the master. Intermediate data stored on local disks of map workers are read by reduce workers using remote procedure calls and sorted to group same key together.

- Reduce worker passes each unique intermediate key and set of values to reduce function. The output of the reduce function is appended to the final output file for this reduce partition.

Multi-Threading, Concurrency, Locks, Synchronization

Tools

- Cassandra: A wide column highly scalable database

- MongoDB/Couchbase: A NoSQL that provides ACID at a document level.

- MySQL: Support master-salve architecture and scales up well

- Memcached & Redis: Distributed cache.

- Zookeeper: Centralized configuration management tool. Also used for leader election and distributed locking, scales very well for the reads but not writes, and can only store small amount of data.

- Kafka: A fault tolerant highly available queue using publisher-subscriber or streaming application.

- NGINX & HAProxy: Load balancers

- Solr & Elastic Search: Search platform.

- Blobstore

- Docker

- Kubernetes

- Mesos

- Hadoop / Spark

- HDFS