Proximal Policy Optimization Algorithms

Paper Source

- Journal:
- Year: 2017
- Institute: OpenAI
- Author: John Schulman, Filip Wolski, Prafulla Dhariwal, Alec Radford, Oleg Klimov

#Deep Reinforcement Learning #Policy Gradient

PPO (Proximal Policy Optimization) is a policy gradient method that is much simpler to implement than TRPO and empirically has better sample complexity. Its novel objective uses clipped probability ratios, which form a pessimistic estimate (a lower bound) of the performance of the policy. Throughout, $\theta_{old}$ is the vector of policy parameters before the update.

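In policy gradient methods, the loss function (an objective to be maximized) is:

$$L^{PG}(\theta) = \hat{\mathbb{E}}_t\left[\log \pi_{\theta}(a_t \mid s_t)\,\hat{A}_t\right]$$

where $\pi_{\theta}$ is a stochastic policy, $\hat{A}_t$ is an estimator of the advantage function at timestep $t$, and $\hat{\mathbb{E}}_t$ denotes the empirical average over a finite batch of samples.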

While it is appealing to perform multiple steps of optimization on this loss $L^{PG}$ using the same trajectory, doing so is not well-justified, and empirically it often leads to destructively large policy updates.
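To make this concrete, here is a toy sketch (not from the paper) that repeatedly ascends the gradient of $L^{PG}$ on one fixed batch and reports how far the policy drifts from the one that collected the data. The two-action softmax policy, the hand-made batch, and the helper names `softmax`, `pg_gradient`, and `ratio_after_steps` are all assumptions made for illustration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


def pg_gradient(logits, batch):
    """Gradient of mean(log pi(a) * A) w.r.t. the logits of a softmax policy.

    For a softmax policy, d log pi(a) / d logit_i = 1[i == a] - pi(i).
    """
    probs = softmax(logits)
    grad = [0.0] * len(logits)
    for action, adv in batch:
        for i in range(len(logits)):
            indicator = 1.0 if i == action else 0.0
            grad[i] += adv * (indicator - probs[i]) / len(batch)
    return grad


def ratio_after_steps(num_steps, lr=0.5):
    """Probability ratio pi_theta(a=1) / pi_theta_old(a=1) after num_steps
    gradient-ascent steps on the SAME batch (hypothetical toy data)."""
    batch = [(1, 1.0), (0, -1.0)]  # (action, advantage estimate)
    logits = [0.0, 0.0]            # theta_old: uniform policy
    old_prob = softmax(logits)[1]
    for _ in range(num_steps):
        grad = pg_gradient(logits, batch)
        logits = [x + lr * g for x, g in zip(logits, grad)]
    return softmax(logits)[1] / old_prob


if __name__ == "__main__":
    for k in (1, 5, 50):
        print(k, ratio_after_steps(k))
```

In this toy setting nothing stops the ratio from growing with each extra step, which is the behavior the TRPO constraint and the PPO clipping are meant to limit.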

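In TRPO, a surrogate objective is maximized subject to a constraint on the size of the policy update:

$$\max_{\theta}\; \hat{\mathbb{E}}_t\left[\frac{\pi_{\theta}(a_t \mid s_t)}{\pi_{\theta_{old}}(a_t \mid s_t)}\,\hat{A}_t\right] \quad \text{subject to} \quad \hat{\mathbb{E}}_t\left[\mathrm{KL}\left[\pi_{\theta_{old}}(\cdot \mid s_t),\, \pi_{\theta}(\cdot \mid s_t)\right]\right] \le \delta$$

Now, in PPO, the constraint is replaced by clipping, and the loss function becomes:

$$L^{CLIP}(\theta) = \hat{\mathbb{E}}_t\left[\min\left(r_t(\theta)\,\hat{A}_t,\; \mathrm{clip}\left(r_t(\theta),\, 1-\epsilon,\, 1+\epsilon\right)\hat{A}_t\right)\right]$$

where $r_t(\theta) = \frac{\pi_{\theta}(a_t \mid s_t)}{\pi_{\theta_{old}}(a_t \mid s_t)}$ is the probability ratio and $\epsilon$ is a hyperparameter (for example, $\epsilon = 0.2$).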
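As a minimal sketch of the clipped objective, the function below averages $\min(r_t \hat{A}_t,\ \mathrm{clip}(r_t, 1-\epsilon, 1+\epsilon)\,\hat{A}_t)$ over a batch, where $r_t$ is the probability ratio between the new and old policies. The names `clip` and `clipped_surrogate` and the plain-list inputs are assumptions for illustration, and the default `epsilon=0.2` is only an example value.

```python
def clip(x, lo, hi):
    """Clamp x to the interval [lo, hi]."""
    return max(lo, min(x, hi))


def clipped_surrogate(ratios, advantages, epsilon=0.2):
    """Empirical mean of min(r * A, clip(r, 1 - eps, 1 + eps) * A).

    ratios:     probability ratios pi_theta(a|s) / pi_theta_old(a|s)
    advantages: advantage estimates for the same timesteps
    """
    terms = []
    for r, adv in zip(ratios, advantages):
        unclipped = r * adv
        clipped = clip(r, 1.0 - epsilon, 1.0 + epsilon) * adv
        # Taking the minimum makes the estimate pessimistic: a change in the
        # ratio is ignored when it would improve the objective beyond the
        # clip range, and kept when it makes the objective worse.
        terms.append(min(unclipped, clipped))
    return sum(terms) / len(terms)


if __name__ == "__main__":
    # Positive advantage: gains from r > 1 + eps are capped.
    print(clipped_surrogate([1.5], [1.0]))
    # Negative advantage: the penalty from r > 1 + eps is not capped.
    print(clipped_surrogate([1.5], [-1.0]))
```

In an actual implementation the ratio is usually computed from log-probabilities inside an automatic-differentiation framework, so that gradients flow only through the unclipped branch when it is the active minimum.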

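When the policy and the value function share parameters, the loss must also include a value-function error term. This objective can further be augmented by adding an entropy bonus to ensure sufficient exploration, as suggested in past work. The final objective is:

$$L_t^{CLIP+VF+S}(\theta) = \hat{\mathbb{E}}_t\left[L_t^{CLIP}(\theta) - c_1 L_t^{VF}(\theta) + c_2 S[\pi_{\theta}](s_t)\right]$$

where $c_1$ and $c_2$ are coefficients, $S$ denotes an entropy bonus, and $L_t^{VF}$ is the squared-error loss $(V_{\theta}(s_t) - V_t^{target})^2$.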
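The sketch below evaluates the combined objective for a single timestep of a discrete-action policy: the clipped term, minus $c_1$ times the squared value error, plus $c_2$ times the policy entropy. The function names and the default coefficients `c1=1.0` and `c2=0.01` are illustrative assumptions, not values prescribed by the text above.

```python
import math


def entropy(probs):
    """Shannon entropy S of a discrete action distribution (in nats)."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def value_loss(v_pred, v_target):
    """Squared-error value loss (V_theta(s_t) - V_t^target)^2."""
    return (v_pred - v_target) ** 2


def ppo_objective(l_clip, v_pred, v_target, probs, c1=1.0, c2=0.01):
    """Per-timestep objective L^CLIP - c1 * L^VF + c2 * S, to be maximized.

    l_clip:  clipped surrogate term for this timestep
    v_pred:  value prediction V_theta(s_t)
    probs:   action probabilities pi_theta(. | s_t)
    """
    return l_clip - c1 * value_loss(v_pred, v_target) + c2 * entropy(probs)


if __name__ == "__main__":
    # A uniform two-action policy has entropy ln 2.
    print(ppo_objective(0.5, v_pred=1.0, v_target=2.0, probs=[0.5, 0.5]))
```

Since most optimizers minimize, implementations commonly minimize the negative of this quantity, which flips the signs of all three terms.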

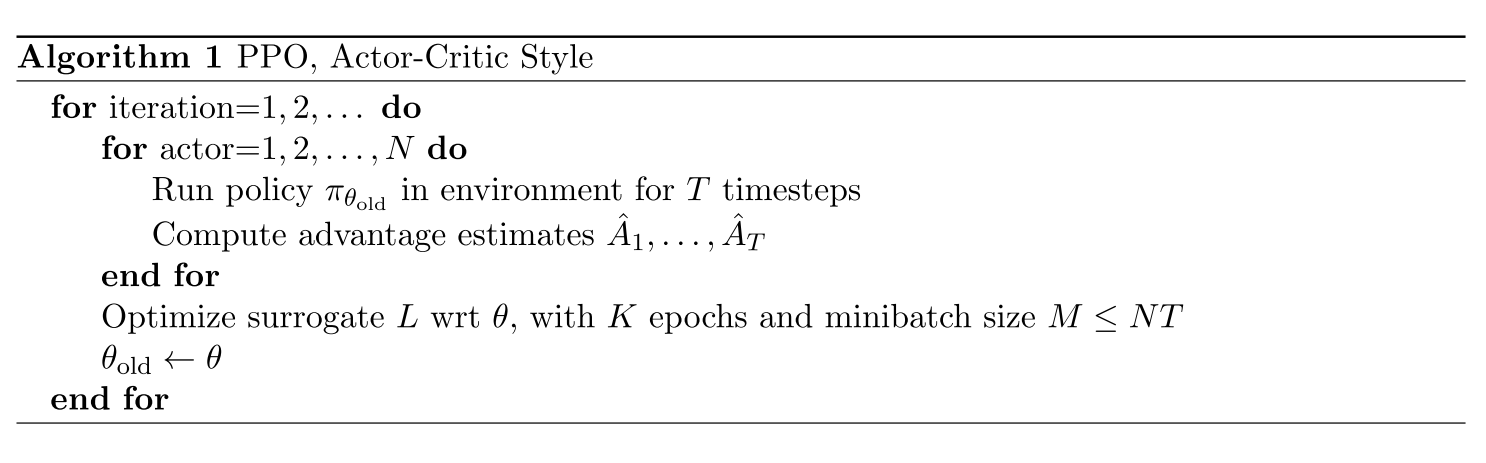

And the total algorithm is as follows:
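As a rough illustration of the loop structure (collect data with the old policy, estimate advantages, run several epochs of ascent on the clipped objective, then set $\theta_{old} \leftarrow \theta$), here is a toy sketch on a two-armed bandit. It is heavily simplified and everything in it is an assumption: a single actor, full-batch updates instead of minibatches, a mean-reward baseline in place of a learned value function, no value or entropy terms, and a hand-derived gradient for a softmax policy.

```python
import math
import random


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


def collect(logits, horizon, rng):
    """Run the old policy for `horizon` steps of a toy bandit
    (arm 1 pays reward 1, arm 0 pays 0) and estimate advantages."""
    probs = softmax(logits)
    actions = [rng.choices([0, 1], weights=probs)[0] for _ in range(horizon)]
    rewards = [1.0 if a == 1 else 0.0 for a in actions]
    baseline = sum(rewards) / len(rewards)  # stand-in for a value function
    advantages = [r - baseline for r in rewards]
    return actions, advantages


def clip_gradient(logits, old_probs, actions, advantages, epsilon):
    """Gradient of the clipped surrogate w.r.t. the softmax logits."""
    probs = softmax(logits)
    grad = [0.0] * len(logits)
    for a, adv in zip(actions, advantages):
        r = probs[a] / old_probs[a]
        clipped_r = max(1.0 - epsilon, min(r, 1.0 + epsilon))
        # Only the unclipped term depends on theta when it is the minimum;
        # otherwise the clipped term is active and contributes no gradient.
        if r * adv <= clipped_r * adv:
            for i in range(len(logits)):
                indicator = 1.0 if i == a else 0.0
                grad[i] += adv * r * (indicator - probs[i]) / len(actions)
    return grad


def train(iterations=50, horizon=64, epochs=4, lr=0.5, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    logits = [0.0, 0.0]
    for _ in range(iterations):
        old_probs = softmax(logits)  # snapshot of pi_theta_old
        actions, advantages = collect(logits, horizon, rng)
        for _ in range(epochs):      # several epochs on the same data
            grad = clip_gradient(logits, old_probs, actions, advantages, epsilon)
            logits = [x + lr * g for x, g in zip(logits, grad)]
    return softmax(logits)


if __name__ == "__main__":
    print(train())  # probability mass should shift toward arm 1
```

Because the clipped gradient vanishes once the ratio leaves the clip range in the improving direction, each iteration moves the policy only a bounded amount away from the policy that collected the data.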