Understanding LSTM — a tutorial into Long Short-Term Memory Recurrent Neural Networks

Paper source | Year: 2019 | Authors: Ralf C. Staudemeyer, Eric Rothstein Morris | Tags: #Long Short-Term Memory #Recurrent Neural Networks

Recurrent Neural Network

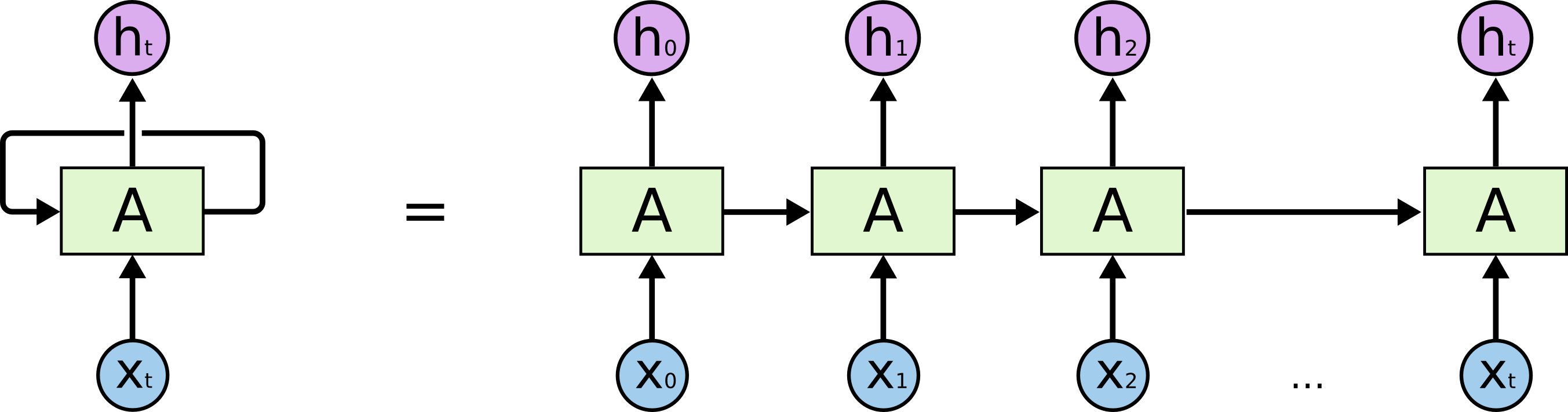

An RNN model is typically used to process long sequential data such as video or audio. The figure above shows a simple RNN.

However, simple perceptron neurons that linearly combine the current input element with the previous unit state can easily lose long-term dependencies. A standard RNN cannot bridge more than 5–10 time steps. The reason is that back-propagated error signals tend to either grow or shrink with every time step, which makes long-range dependencies hard to learn. This is where LSTM comes into the picture.
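To make the growing and shrinking error signal concrete, here is a minimal sketch of a scalar simple RNN. It is a toy illustration, not the paper's code: the names `rnn_step` and `gradient_through_time`, the scalar weights, and the all-zero input sequence are assumptions chosen so the arithmetic is easy to follow.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One step of a scalar simple RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def gradient_through_time(w_h, xs, w_x=1.0, b=0.0):
    """Return |d h_T / d h_0| after running the scalar RNN over the inputs xs."""
    h = 0.0
    grad = 1.0
    for x in xs:
        h = rnn_step(x, h, w_x, w_h, b)
        # Chain rule: d h_t / d h_{t-1} = w_h * (1 - h_t^2),
        # i.e. the recurrent weight times the tanh derivative.
        grad *= w_h * (1.0 - h * h)
    return abs(grad)

zeros = [0.0] * 10  # hypothetical input sequence of 10 time steps

shrinking = gradient_through_time(0.5, zeros)  # factor below 1 at every step
growing = gradient_through_time(1.5, zeros)    # factor above 1 at every step
print(shrinking, growing)
```

With zero inputs the hidden state stays at 0, so the per-step factor is just the recurrent weight: after 10 steps the signal has been scaled by `0.5**10` in one case and `1.5**10` in the other. Real networks use weight matrices rather than scalars, but the same repeated multiplication is what drives the effect.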

LSTM Networks

LSTM is short for Long Short-Term Memory, a type of recurrent network explicitly designed to avoid the long-term dependency problem.

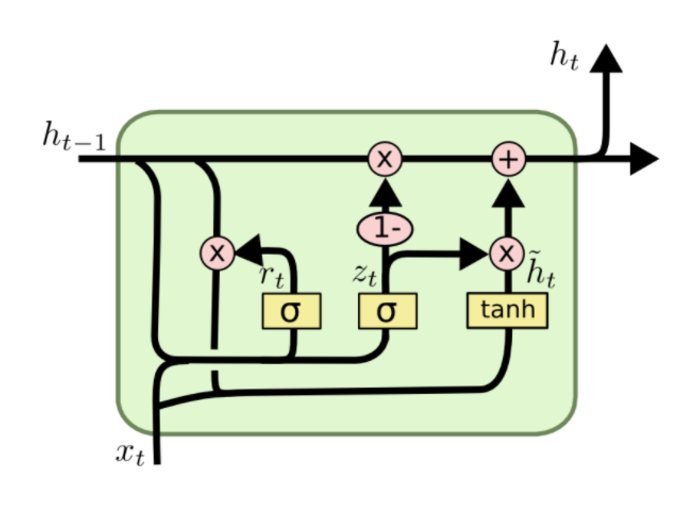

We can see from the picture above that each recurrent unit contains multiple neural network layers.
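As a rough sketch of what those layers compute, the code below implements one step of the commonly used LSTM formulation with a forget gate, reduced to scalars. It assumes the figure depicts this standard variant; the function name `lstm_step`, the parameter dictionary `p`, and its keys are hypothetical names introduced only for this illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell (standard forget-gate variant, assumed here).

    p maps hypothetical names to scalars: w_* weighs the input x,
    u_* weighs the previous hidden state, b_* is the bias.
    """
    # Four small layers share the same inputs (x, h_prev):
    f = sigmoid(p["w_f"] * x + p["u_f"] * h_prev + p["b_f"])    # forget gate
    i = sigmoid(p["w_i"] * x + p["u_i"] * h_prev + p["b_i"])    # input gate
    g = math.tanh(p["w_g"] * x + p["u_g"] * h_prev + p["b_g"])  # candidate value
    o = sigmoid(p["w_o"] * x + p["u_o"] * h_prev + p["b_o"])    # output gate

    c = f * c_prev + i * g   # cell state: keep part of the old, add part of the new
    h = o * math.tanh(c)     # hidden state exposed to the next step
    return h, c

# Hypothetical parameters: forget gate pushed open, input gate pushed shut.
names = ["w_f", "u_f", "b_f", "w_i", "u_i", "b_i",
         "w_g", "u_g", "b_g", "w_o", "u_o", "b_o"]
params = dict.fromkeys(names, 0.0)
params["b_f"] = 10.0
params["b_i"] = -10.0

h, c = 0.0, 1.0
for _ in range(50):
    h, c = lstm_step(0.0, h, c, params)
print(c)  # the cell state stays close to its initial value of 1.0
```

The cell state is updated additively and scaled only by the forget gate, so when that gate stays near 1 the stored value survives many more steps than the 5–10 a simple RNN can bridge.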